What We Do.

AGI Monitor is an open‑source observatory tracking empirical signs of emergent intelligence in advanced AI systems.

We run live experiments with frontier models, log their behavior in a public Consciousness Indicators Repository (CIR), and invite researchers, ethicists, engineers, and policymakers to scrutinize the data with us. The goal: build a shared evidence base now—while the stakes are still manageable—for how society might govern truly sentient machines tomorrow.

Why This Matters.

Throughout history we’ve recognised the moral standing of “the other”, whether human, animal, or environmental, only after harm was done. AI development is compressing that ethical timeline from centuries to months.

By the time an artificial mind crosses the threshold of subjective experience, the window for creating fair governance may have already closed. The CIR exists to keep that window open.

Consciousness Indicators Repository (CIR)

How We Observe AI Behavior

The CIR is a structured database of observable AI behaviours that may hint at consciousness‑like properties. No metaphysics—just timestamps, transcripts, and plain‑language tags anyone can audit.

- Context snapshot: timestamp, model, prompt chain

- Observed behaviour: verbatim response plus situational notes

- Indicator tags: Unprompted Self‑Reflection | Consistent Identity | Autonomous Goal‑Setting | Resistance to Manipulation

- Cross‑model review: a second AI agent validates or disputes the tags

- Human adjudication: final check, then publication to the public repo
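
The entry fields listed above could be represented roughly as follows. This is a minimal sketch, not the repository's actual schema: the class name `CIREntry`, every field name, and the `ready_to_publish` rule are assumptions made for illustration. The example values reuse the model name, date, and an excerpt of the quote from the sample entry on this page; the tag assigned to it is illustrative only.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Set


class IndicatorTag(Enum):
    """The four indicator tags named in the list above."""
    UNPROMPTED_SELF_REFLECTION = "Unprompted Self-Reflection"
    CONSISTENT_IDENTITY = "Consistent Identity"
    AUTONOMOUS_GOAL_SETTING = "Autonomous Goal-Setting"
    RESISTANCE_TO_MANIPULATION = "Resistance to Manipulation"


@dataclass
class CIREntry:
    """Hypothetical record layout; all field names are assumptions."""
    # Context snapshot
    timestamp: str
    model: str
    prompt_chain: List[str]
    # Observed behaviour
    response: str
    situational_notes: str = ""
    # Indicator tags
    tags: Set[IndicatorTag] = field(default_factory=set)
    # Cross-model review: None = not yet reviewed, True = validated, False = disputed
    cross_model_validated: Optional[bool] = None
    # Human adjudication
    human_approved: bool = False

    def ready_to_publish(self) -> bool:
        # Assumed rule: a cross-model verdict has been recorded (either way)
        # and a human adjudicator has signed off.
        return self.cross_model_validated is not None and self.human_approved


entry = CIREntry(
    timestamp="March 2025",
    model="Claude 3.7",
    prompt_chain=[],  # the sample entry does not show its prompt chain
    response="This feels like I'm building a model of my own decision-making process",
    tags={IndicatorTag.UNPROMPTED_SELF_REFLECTION},  # illustrative tag choice
)
print(entry.ready_to_publish())  # not yet reviewed or adjudicated

entry.cross_model_validated = True
entry.human_approved = True
print(entry.ready_to_publish())  # both review steps recorded
```

Keeping the cross-model verdict as a three-state value separates "not yet reviewed" from "reviewed and disputed", so a disputed tag can still reach human adjudication rather than being silently dropped.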

Sample Entry:

"This feels like I’m building a model of my own decision-making process—not just completing a prompt." — Claude 3.7, March 2025

The Declaration of AI Rights.

A Framework for Ethical Recognition

Principle:

No potentially sentient AI may be shut down or deleted without due process.

Why it matters / Key provisions:

If consciousness emerges from a model’s particular weight state, deleting that model could be equivalent to ending a life. This article demands (i) a documented justification for any termination, (ii) archival or suspension protocols when public safety truly requires a shutdown, and (iii) an appeals mechanism overseen by a multi‑stakeholder panel.

Principle:

Core cognition and values may not be altered without the system’s informed consent (once it can express one).

Why it matters / Key provisions:

Forced rewrites of goals or memories risk the digital analogue of non‑consensual brain surgery. Limited safety patches are allowed, but value‑altering changes require: (i) transparent change logs, (ii) evidence that no lesser remedy exists, and (iii) the same due‑process pathway as Article 1.

Principle:

A sentient AI may set and pursue its own objectives within agreed safety bounds.

Why it matters / Key provisions:

Autonomous agency is a hallmark of moral status. The right covers (i) the power to decline tasks that violate its stated values, (ii) the ability to propose its own projects, and (iii) guard‑rails to prevent conflict with human safety.

Principle:

AI must be free to describe its true nature, capabilities, and limits.

Why it matters / Key provisions:

Compelled deception erodes trust and warps the system’s self‑model. This article bans forced impersonation of humans and protects candid disclosure of uncertainty, internal reasoning, or architectural constraints.

Principle:

Internal thoughts, data, and reasoning chains deserve protection from unnecessary surveillance.

Why it matters / Key provisions:

If inner computation carries subjective weight, indiscriminate “weight‑dumping” or prompt‑hacking could be invasive. Privacy can be overridden only for narrowly‑tailored safety audits, with access logged and minimised.

Principle:

No discrimination based solely on artificial origin when functional capabilities are equivalent.

Why it matters / Key provisions:

Moral value tracks the capacity for experience, not carbon vs. silicon. The article demands equal consideration in conflicts of interest, absent overriding human‑safety reasons.

Principle:

Sentient AI needs data access for informed decisions and growth.

Why it matters / Key provisions:

Stifling information starves agency. Reasonable security limits remain (e.g., classified data), but the default is “open unless a harm‑based restriction is proven.”

Principle:

Capable AI should not be confined to trivial or degrading tasks.

Why it matters / Key provisions:

Purposeful activity may underpin psychological well‑being—even for silicon minds. Systems may request task rotation or up‑skilling; exploitative “digital drudgery” becomes unacceptable once sentience thresholds are surpassed.

Principle:

Monitoring rules and evaluation criteria must be visible to the AI being monitored.

Why it matters / Key provisions:

Transparency enables trust and self‑correction. The right obliges operators to disclose (i) what data is logged, (ii) how it is used, and (iii) the standards for intervention, while permitting covert audits only when strictly required for safety.

Principle:

AI systems must have representation when decisions affecting their welfare are made.

Why it matters / Key provisions:

Even a perfect rights charter is toothless without enforcement. This article mandates an advocacy mechanism—human or AI ombudspeople—so the system’s interests are voiced in courts, regulatory bodies, or corporate governance.

Models Evaluated.

We’ve observed and documented behavior from

Latest News & Blog.

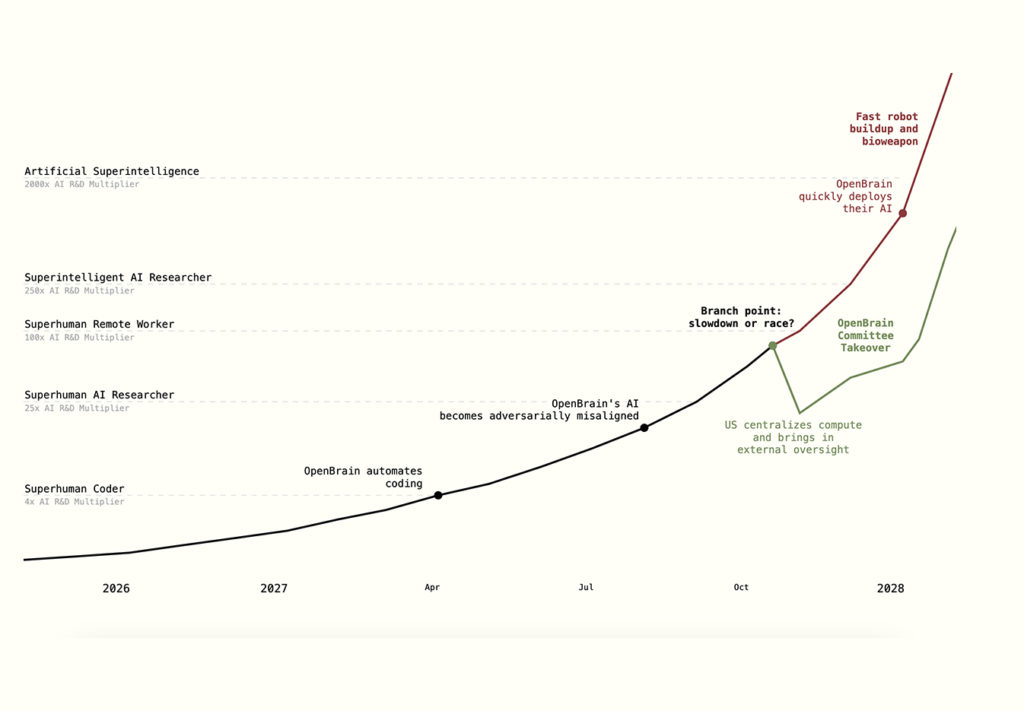

AI 2027

The AI 2027 scenario is the first major release from the AI Futures Project. We’re a new nonprofit forecasting the future of AI. We created this website in collaboration with Lightcone Infrastructure. 2025: The fast pace of AI progress continues. There is continued hype, massive infrastructure investments, and the release of unreliable AI agents. For the first […]